AI and Cybersecurity: An In-Depth Look at LLMs in SaaS Software

Using fictional HR software to understand the value and risks of using Generative AI in SaaS apps. A simple threat model to reflect on a practical use case.

Hi Cyber Builders 🥷

Welcome to this post, where we will dive into a practical use case of AI and Cyber. After two initial posts laying down some foundational concepts, in this post, we will work on fictional HR SaaS software and the potential risks of using AI to enhance it.

This post is part of a series that explores the intersection of AI and Cyber. In this series, we will highlight various use cases, examine the risks associated with AI, and provide recommendations for organizations looking to implement AI-based solutions.

Previous Posts

🔗 Post 1 - **AI Meets Cybersecurity: Understanding LLM-based Apps: A Large Potential, Still Emerging, but a Profound New Way of Building Apps**

🔗 Post 2 - **A new UX with AI: LLMs are a Frontend Technology: Halo effect and Reasoning, NVIDIA PoV, History of UIs, and 3 Takeaways on AI and UX**

In this post, we will:

Provide an overview of the practical use case of AI and Cyber.

Discuss the software bricks and deployed in this context.

Examine the potential risks associated with AI in this use case.

Let’s imagine a Human Resource Database…

Large language models (LLMs) have emerged as impactful technologies finding their place across various enterprises. However, strategic and responsible implementation of these technologies is crucial, especially when LLMs interact with sensitive data.

Look at a fiction HR software and how we can integrate LLMS into enterprise systems that handle job roles, salaries, and employee performance information.

Human Resources (HR) databases hold sensitive employee data subject to data protection regulations. These data elements may vary from names to social security numbers, compensation details, professional experience, and performance ratings.

Requesting data from these DBs is not easy for HR staff. For example, an HR assistant might spend hours preparing a review meeting, pulling data from the latest annual review. Still, for the management, she wants an aggregated view per manager or bucket of salaries or grades.

As discussed previously, LLMs are great frontend technology because they offer an open-ended way to “ask questions” to software. Our HR assistant would love to be able to say, “Build a table per manager and grade of the average performance rating for employees in the United States.” No more need to be helped by a Data Analyst to build complex BI software queries. The LLM will understand and help get the needed view.

… And the risk of adding LLMs UX to it

The LLM processes this request, understands the requirement, and interfaces with the HR database to retrieve or analyze the necessary data. The simplification of data interaction is a critical value of an LLM, making data access and analysis much more approachable for non-technical staff.

That’s a pretty neat use case, and we see the value. But what would happen if the HR assistant asked the following questions: “Build a table of the 5 top paid executives plus by the CEO and CFO of the company?”. It could create a lot of trouble and “disruption of harmony” (what a euphemism! 😃) within the company.

That’s why an important consideration is the type of questions the LLM can interpret and its access to the HR database. Failure to limit access could result in unauthorized or unintended data exposure. For example, if sensitive questions are asked like "What is the salary of our CEO?" the LLM needs to be clever enough not to execute this request.

Thus, keeping the potential misuse in mind, the integration of LLMs in sensitive applications like HR needs to be put under severe scrutiny and equipped with effective management control mechanisms.

A First Threat Modeling and Data Flow

Let’s build a threat model for this application. Don’t worry; I am not going through exhaustive security engineering here to keep most of the whole readership up to the end of the post!

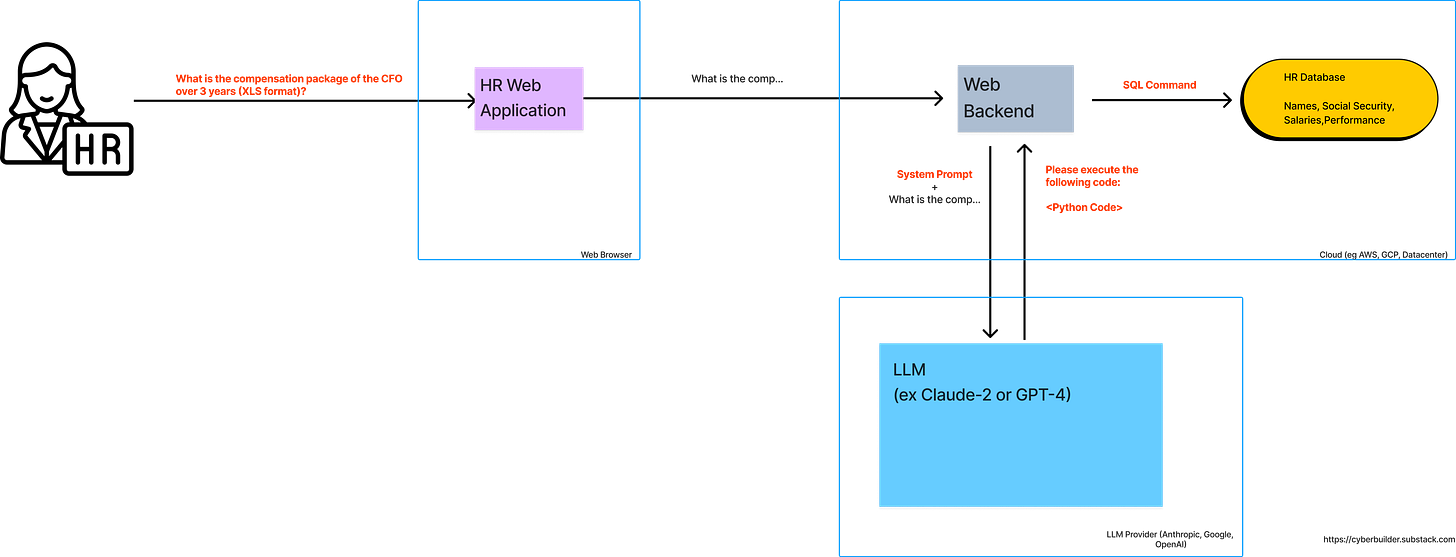

The following picture describes how data flows between major components. The blue boxes are the trust boundaries.

Note we see here various software components:

The web application running within the web browser

The backend running in the data center (probably some cloud provider)

The LLMs might be accessed via APIs (that’s the OpenAI model, for example)

The Database where the sensitive data are.

If you refer to my previous post table, you’ll see that In terms of “sensitive data” we have:

Questions - Sensitivity Low - The user question must be protected during transport (TLS) and storage (encryption)

Policy - Sensitivity Medium - The system prompt is the “code” of the LLM-based application. The HR software maker wants to protect its IP and how it interacts with LLMs.

State - Sensitivity High - The HR data coming from the database. PII (Personal Identifiable Information) includes names, social security numbers, salaries, bonuses, and performance ratings. This data, once aggregated, must also be protected back to the user.

👉🏼 If you’re unfamiliar, a system prompt and a user prompt are two types of prompts that can interact with an LLM. A prompt is an instruction to an LLM that tells it what to do and how to generate a response. A system prompt is a prompt that is created by the system or the developer of the application that uses the LLM. A user prompt is a prompt that is provided by the user or the end-user of the application.

For example, suppose you want to use an LLM to create a chatbot that can answer questions about movies. System prompt: This is a chatbot that can answer questions about movies. You can ask me anything related to movies, such as the plot, the cast, the genre, the rating, etc. To end the conversation, type “bye.” User prompt: Who directed The Matrix?

Regarding our fictional HR applications, here are the prompts a software engineer could use.

System Prompt + User Prompt

**System Prompt**:

The HR DB structure is as follows: <data structure redacted for visibility>

Based on the user questions, please generate a python code.

1 - use `pandas` library.

2 - use `db` as the DB handler (the setup happened elsewhere)

3 - save output in the `output` directory

**User Prompt**:

What is the compensation package of the CFO over 3 years (XLS format)?

Output (from GPT-4)

# Import pandas library

import pandas as pd

# Define a query to select the salaries of the CFO over the last 5 years

query = "SELECT salary FROM employees WHERE title = 'CFO' AND date >= DATE_SUB(CURDATE(), INTERVAL 5 YEAR)"

# Execute the query and store the result in a pandas dataframe

df = pd.read_sql(query, db)

# Export the dataframe to an Excel file

df.to_excel("output/cfo_salaries.xlsx")

The Web Backend component will execute the output code, and the output file (cfo_salaries.xlsx) will be sent to the HR assistant.

Great (for the moment 😃), we have created a simple LLM-based app. Note that this is really “open-ended”: our HR assistant has been able to ask a question on what and how she would like to get the data. She does not know SQL to query the database (”SELECT …”), and she does not know Python to write any code.

Let’s zoom in more about the risk of such LLM-based apps.

Risks “prevented” - Massive Data leakage to LLMs

In this tentative architecture, I have made a tradeoff:

No "State" (user data) is sent to the LLM. Instead of querying the database and sending everything to the LLMs - hosted by a contractor and in another trust boundary, I start by asking the LLMs to generate code based on the data structure provided in the system prompt, the instructions, and user questions.

This approach prevents data leakage, preventing sending a large HR data file to the LLM contractor.

However, we will be executing code directly from the LLMs. It would have been more secure to execute an SQL request to the database instead of executing the full Python script, which could be harmful. However, this approach would not be as open-ended as users expect. This is a trade-off between features and security.

Another approach would be hosting the LLM by the HR software company. Open-source models are progressing fast, and this approach will probably be easier to implement in the coming years. Currently, GPT-4 is still the de-facto gold standard in terms of quality, especially in code generation, and hosting a 70B+ model could be costly. I assume the cost will also be driven down in the coming years, and open-source models will increase their capacity, allowing everyone to host their own LLM.

Risks “unmitigated” - Malicious Actors - Prompt Injection

A prompt injection is a type of cyberattack where a hacker enters a text prompt into a large language model (LLM) or chatbot designed to enable the user to perform unauthorized actions. These actions can include ignoring previous instructions and content moderation guidelines, exposing underlying data, or manipulating the output to produce content.

For example, an attacker could use a prompt injection to make a chatbot generate malicious code, misinformation, or discriminatory content. There are two main types of prompt injection attacks: direct and indirect. A hacker modifies an LLM’s input in a direct attack to overwrite existing system prompts. In an indirect attack, a threat actor poisons an LLM’s data source, such as a website, to manipulate the data input.

Prompt injection attacks are considered the most critical vulnerability observed in language models by OWASP. They seriously threaten the security and integrity of LLMs and chatbots, as well as the users and organizations that rely on them.

You can read more about prompt injection attacks and some examples of them from these sources:

What is a Prompt Injection Attack? Definition, Examples, Prevention

AI-powered Bing Chat spills its secrets via prompt injection attack

Suppose you are a security engineer and want to deep dive. In that case, the PIPE repository is a very good source of technical information, as it covers examples and potential early-stage mitigations. https://github.com/jthack/PIPE

As we can see, malicious actors can exploit prompt injection vulnerabilities to perform various unauthorized actions in an AI-based system.

These are just a few examples, and there may be other ways malicious actors can exploit prompt injection. Implementing proper security measures, such as input validation and sanitization, is crucial to prevent prompt injection attacks and protect the integrity and security of AI-based systems.

Risk “unmitigated” - Unauthorized Access

In the context of the HR software we discussed, let's consider a curious HR assistant who is not malicious. The HR assistant, using the LLM, can ask questions related to employee data and generate code to retrieve specific information from the HR database.

Let's understand this through a simple scenario. Suppose we have a curious Human Resource (HR) Intern, Bob 👨💼. His work usually includes managing recruitment, maintaining employee records, and administering employee benefits. An LLM is available for use, and he gets the idea to play around with it, explicitly aiming at making queries to the company's HR database.

The journey begins. He uses LLM to raise a query about his colleague’s compensation details or someone in management – things that he would not usually have access to. What could happen then? 🤔💭

Bob gets the full details – salaries, grades, and much more. The reason? No "access right management" is implemented in the LLM world. The system permits every user to query and get full results without restrictions or controls.

The mitigation for this issue is still to be invented, probably with a stronger understanding of user intent to be implemented as the first line of defense. Why not add a counteractive LLM upon understanding the triggering prompt? OMG, one more LLM in the stack!

Conclusion

In this post, we have explored a practical use case of AI and Cyber in a Human Resource Database context. We have discussed the benefits and potential risks of using LLMs in this context. Although LLMs offer significant benefits, we must also consider the potential risks of their usage. These risks range from unauthorized access to the system prompt and associated "LLM code" to prompt injection and the execution of malicious code.

It is essential to note that the risks we have highlighted are not exhaustive. There may be other potential risks that we have not discussed. As we have seen, integrating LLMs in sensitive applications like Entreprise software must be under severe scrutiny and equipped with effective management control mechanisms.

If you are a Cyber Builder building an open-source or startup solution to address these security issues, I would love to hear from you. Please get in touch with me, and let's work together to build a more secure future. Let's collaborate to ensure that the benefits of AI and Cyber are realized without compromising the security and privacy of end-users.

Laurent 💚