AI Security Engineering Is Needed, Not Emotions

Discovering the Role of Security Engineering in Addressing Emerging Challenges within Artificial Intelligence

Good morning, afternoon, and evening, dear readers and followers. I am back at the writing desk for this new edition of Cyber Builders.

Cyber Builders is a comprehensive publication that covers various cybersecurity topics, such as AI, the cybersecurity ecosystem, the investment landscape, and more. It's designed to provide valuable insights to those building new cybersecurity programs or products and to security practitioners who want to stay up-to-date with the latest trends.

This week, I am discussing a hot topic for our industry: AI and how to respond to its threats. This is the first post in a series on generative AI and cybersecurity.

This first post describes the threats AI systems introduce, such as privacy and confidentiality risks, along with other general issues. We should not react emotionally; instead, we should stick to sound security engineering practices like threat modeling.

I am working on a more technical approach to the security of LLM-based applications and building a threat model for them. When application developers add LLMs to their applications, they add a new range of threats. If you are one of them, please get in touch with me (cyberbuilders@substack.com). I’d love to set up a call and discuss your perspectives. I am also eager to chat about regulations and AI, as the EU and the US are rushing to publish new rules.

AI Concerns and Emotional Responses

The Group of Seven (G7, a forum of seven of the world's largest advanced economies) has recently called for responsible AI development, highlighting the need for a "risk-based" approach to artificial intelligence regulation. The digital ministers of the G7 nations agreed that this approach should be adopted as European lawmakers rush to introduce an AI Act enforcing rules on emerging tools such as ChatGPT.

The ministers recognized that AI technologies should be developed in an open and enabling environment based on democratic values. However, they also acknowledged that policy instruments to achieve the common goal of trustworthy AI might vary across G7 members.

The joint statement issued by G7 ministers at the end of a two-day meeting in Japan emphasized several issues crucial for responsible AI development. These issues include intellectual property, misinformation and its impact on democracy, and impersonation leading to criminal activities and fraud.

European Commission Executive Vice President Margrethe Vestager told Reuters ahead of the agreement:

"The conclusions of this G7 meeting show that we are not alone in this".

This statement highlights the significance of international cooperation in addressing the challenges presented by AI development.

"Pausing AI development is not the right response. Innovation should continue to develop within certain guardrails that democracies must establish."

So said Jean-Noel Barrot, French Minister for Digital Transition, in an interview with Reuters. He also stated that France would provide some exemptions for small AI developers under the upcoming EU regulation.

This is one of the latest public statements about AI and the new threats it brings. The most recent voice of concern is one of AI's founding fathers, Geoffrey Hinton. Wired portrayed him as an “AI doomer,” which is an exaggeration; his point of view is more balanced.

The researcher left Google to raise awareness of the risks posed by intelligent machines. Hinton is alarmed by the rapid advancement of AI. He is apprehensive about near-term risks such as AI-generated disinformation campaigns, but he also believes we must start addressing long-term problems now. He said:

“We should continue to develop it because it could do wonderful things. But we should put equal effort into mitigating or preventing the possible bad consequences.”

Given the numerous public claims about the dangers of AI, one may wonder what we should do. Should we petition our representatives and demand an AI ban? Should we stay glued to news channels 24/7, watching so-called experts talk about it? Is an AI shelter-in-place order coming?

Instead, we should strive to be careful and rational in our approach. Rather than resorting to extreme measures, we can look to the cybersecurity community for guidance. For decades, we have utilized security engineering principles to develop and implement effective measures. By applying similar principles to AI development, we can work towards creating a safe and secure AI ecosystem that benefits society.

The Need For AI Security Engineering

Just as the cloud transformed how we store and access data, artificial intelligence (AI) is revolutionizing how we process and analyze data.

Since the emergence of the cloud as the leading software delivery platform, the security industry has had a love-hate relationship with it. On the one hand, many practitioners have tried limiting cloud usage within their organizations, fearing that sensitive and confidential data would be leaked outside the company perimeter. On the other hand, cyber product developers soon understood that the cloud has enormous potential for managing security telemetry at scale and updating software and knowledge databases.

Nowadays, the value of cloud-managed products is well understood, and security leaders appreciate their efficiency and overall cost savings. On-premise security products require updates, and enterprises often pay system integrators to apply them within their networks and systems. A cloud platform can update its software or data several times a day, a frequency many security operations teams would struggle to match. Ultimately, customers love to offload costly operations to their vendors.

The same cycle has begun with AI technologies and the newest Large Language Models (LLMs), such as OpenAI's ChatGPT and Microsoft's Copilots (GitHub Copilot for code and Security Copilot for threat analysts). Many initial reactions are emotional. Some claim that despite the benefits of public LLMs, the risks are too high, and individuals and organizations must be cautious with the data they submit. Some enterprises, most recently Apple, have banned the use of ChatGPT for fear of leaking essential data.

I understand these reactions to a new technology, but I don’t think they are the right long-term answer. We must put our emotions aside and work as engineers on the risks and threats that AI technologies raise. We need a practical and holistic solution. We need Security Engineering.

A Short Intro to Security Engineering

Security Engineering focuses on designing, implementing, and maintaining secure systems to protect valuable information and resources from potential threats. This multidisciplinary approach combines various aspects of computer science, engineering, and risk management to create robust systems that can withstand known and emerging cyber risks. Risk assessment and threat modeling are central to security engineering and essential for understanding and mitigating vulnerabilities.

Risk assessment involves identifying, evaluating, and prioritizing potential risks to the organization's data, systems, and processes. By systematically analyzing various factors, such as the likelihood of occurrence, potential impact, and existing control measures, security engineers can develop tailored strategies to mitigate, accept or eliminate these risks.
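To make the prioritization step concrete, here is a minimal Python sketch of a qualitative risk register. It assumes a 1-to-5 scale for likelihood and impact, scores inherent risk as their product, discounts that score by an assumed control-effectiveness factor, and maps the residual score to a treatment. The scales, thresholds, and example risks are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); assumed scale
    impact: int      # 1 (negligible) to 5 (severe); assumed scale
    control_effectiveness: float = 0.0  # 0.0 = no controls, 1.0 = fully mitigated (assumption)

    def inherent_score(self) -> int:
        # Classic likelihood x impact product, range 1..25
        return self.likelihood * self.impact

    def residual_score(self) -> float:
        # Existing controls reduce the inherent score proportionally
        return self.inherent_score() * (1.0 - self.control_effectiveness)


def treatment(score: float) -> str:
    # Hypothetical thresholds; each organization sets its own risk appetite
    if score >= 15:
        return "eliminate"
    if score >= 6:
        return "mitigate"
    return "accept"


def prioritize(risks: list[Risk]) -> list[tuple[str, float, str]]:
    # Rank by residual score, highest first, and attach a treatment
    ranked = sorted(risks, key=lambda r: r.residual_score(), reverse=True)
    return [(r.name, r.residual_score(), treatment(r.residual_score())) for r in ranked]


if __name__ == "__main__":
    register = [
        Risk("Chatbot returns off-brand answers", likelihood=3, impact=1),
        Risk("Customer database breach", likelihood=3, impact=5, control_effectiveness=0.5),
        Risk("Confidential data pasted into a public LLM", likelihood=4, impact=4),
    ]
    for name, score, action in prioritize(register):
        print(f"{score:5.1f}  {action:9}  {name}")
```

The multiplicative score is only one convention; many teams use a lookup matrix instead, but the idea of ranking by residual risk and choosing a treatment stays the same.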

Threat modeling is a proactive approach to identifying potential attack vectors that adversaries could exploit. It involves comprehensively representing a system's architecture and valuable assets. A systematic analysis highlights the inherent weaknesses that malicious actors may target. Security engineers use this information to develop strategies to defend against potential attacks and continuously improve security posture.

A "valuable asset" refers to any component of an organization's infrastructure or information that is crucial to its operations, reputation, or financial stability. Valuable assets can be tangible or intangible and include various types of data, systems, processes, and intellectual property. Compromising these assets could lead to significant adverse consequences.

A threat is any potential event, action, or circumstance that could compromise an asset's confidentiality, integrity, or availability. In other words, it is a potential danger that could negatively impact the security of crucial components, such as data, systems, processes, or intellectual property. Threats can originate from various sources, including external actors like cybercriminals and nation-state hackers, or internal factors like human error and system failures.

For example, a valuable asset for an e-commerce company might be its customer database containing sensitive information such as names, addresses, and payment details. This data is essential to the company’s daily operations and to maintaining customer trust. Unauthorized access to this database (the threat) could result in financial loss due to fraud or identity theft and damage the company's reputation, leading to a loss of customers and revenue (the impact).
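The vocabulary above (asset, threat, compromised security property, impact) maps naturally onto a small data model. The Python sketch below encodes the e-commerce example as a threat-model entry. The class names and fields are hypothetical, and the second, availability-related threat is an extra illustration that is not in the example above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Property(Enum):
    # The CIA triad: the security properties a threat can compromise
    CONFIDENTIALITY = "confidentiality"
    INTEGRITY = "integrity"
    AVAILABILITY = "availability"


@dataclass
class Asset:
    name: str
    description: str


@dataclass
class Threat:
    description: str
    source: str  # e.g. cybercriminal, insider, system failure
    compromises: set[Property]
    impacts: list[str]


@dataclass
class ThreatModel:
    asset: Asset
    threats: list[Threat] = field(default_factory=list)

    def threats_against(self, prop: Property) -> list[Threat]:
        # All recorded threats that put the given security property at risk
        return [t for t in self.threats if prop in t.compromises]


customer_db = Asset(
    name="Customer database",
    description="Names, addresses, and payment details of customers",
)

model = ThreatModel(asset=customer_db)
model.threats.append(Threat(
    description="Unauthorized access to the customer database",
    source="External cybercriminal",
    compromises={Property.CONFIDENTIALITY},
    impacts=["Financial loss from fraud or identity theft",
             "Reputational damage and lost customers"],
))
model.threats.append(Threat(
    description="Database outage caused by a storage failure",  # hypothetical extra example
    source="System failure",
    compromises={Property.AVAILABILITY},
    impacts=["Orders cannot be processed"],
))

for threat in model.threats_against(Property.CONFIDENTIALITY):
    print(threat.description, "->", "; ".join(threat.impacts))
```

Keeping the security property explicit on each threat makes it easy to ask questions like "what endangers the confidentiality of this asset?", which is the kind of systematic analysis threat modeling is meant to support.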

By combining risk assessment and threat modeling, security engineering gives organizations a holistic understanding of their cyber risk landscape, enabling them to build resilient systems prioritizing privacy and security.

That being said, let’s dive into some macro threats that apply to AI systems.

Everyone talks about Ethical and Humanity Threats…

LLMs carry higher stakes than previous technologies like the cloud. These models could have significant ethical implications (Ethical Threats). For such threats, the valuable assets are humanity and what we value as democracies: freedom, privacy, and equality. The ethical implications of AI include gender diversity, inclusiveness, and balanced political views.

Chase Hasbrouck offers a clear perspective on the various positions around AI Ethical Threats, and I recommend reading his post. He divides people into four groups: Existentialists, Ethicists, Pragmatists, and Futurists. Existentialists fear that AI will take over humanity. They make a plausible argument for taking AI alignment seriously, but they overestimate the likelihood of the risk. Ethicists rightly point out real harms of AI that need to be addressed, but they offer no answer on how to solve them. Pragmatists suggest that learning as we go is the best approach to AI alignment and that a moratorium is impractical. Futurists are excited about AI's potential, but the transformative impact they expect will not arrive very soon.

Some may say that Artificial General Intelligence (AGI) will exist soon. Yes, AI (especially the LLM and generative AI fields) is progressing quickly, but the reality is complex. It reminds me of the fearful people who interviewed me when I ran Sentryo. Because I was an OT security specialist, they always wondered, “Can a bad actor hack a power plant?” It was a question driven by fear and emotion. I always tried to answer with nuanced arguments. Yes, some OT devices can be hacked by default. Still, it is improbable that someone could trigger a chain reaction across hundreds of subsystems in a nuclear plant, bypassing all the safety systems designed and engineered to prevent any chain reaction. But that answer rarely matched the interviewers' expectations. Perhaps it was not sensational enough!

When I advocate for AI security engineering, I follow the same mindset: no fear-mongering, but a well-thought-out set of security measures based on likely threats.

On the philosophical side, sure, AGI will exist someday, but in the meantime, I think I’ll stick to Sarah Constantin's well-written essay: “Why I am not an AI doomer.”

Here is a quote from it:

By contrast, banning the training of large machine learning models is harmful (because it coercively interferes with legitimate scientific and commercial activity) and is no more justified today than a ban on mainframes would have been in the 1960s. In my view, 2020’s AI is a typical new technology: it has risks and benefits, but the benefits probably predominate overwhelmingly, and enforced technological stasis has a brutal opportunity cost. […] We should let present-day AI develop freely, reap the prosperity that it makes possible, and figure out how to mitigate any harm as we go along.

… And Privacy Threats!

A privacy threat arises when personally identifiable information (PII) is disclosed to a third party, for example, when you provide your social security number, name, and birth date to a website. Many people fear that their data will leak to large AI companies, which could reuse it in their training sets and embed the PII within their models.
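One practical control, among others, is to strip obvious PII from prompts before they leave your network. The Python sketch below assumes two deliberately simple regular expressions, one for US Social Security numbers and one for email addresses. These patterns are assumptions that will miss many real-world formats (names and birth dates, for instance, need far more than a regex), so treat this as an illustration of the idea, not a production-grade filter.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs much more
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each PII match with a [LABEL] placeholder and count replacements."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


prompt = "Summarize the complaint from jane.doe@example.com, SSN 123-45-6789."
safe_prompt, removed = redact(prompt)
print(safe_prompt)
print(removed)
```

Only `safe_prompt` would then be sent to an external LLM; the counts can feed an audit log showing how often users try to submit sensitive data.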

On that front, OpenAI faces increasing scrutiny from European data protection regulators over its data usage policies. Recently, Italy requested that OpenAI comply with the European Union's General Data Protection Regulation (GDPR). User consent is one of the pillars of GDPR.

This move came after regulators accused OpenAI of collecting users' data without their consent and not giving them any control over its use. These accusations have put OpenAI under immense pressure to ensure it complies with all relevant data privacy laws in its operating regions. As a result, OpenAI introduced a user opt-out form for its ChatGPT conversation history service.

I wonder whether privacy in AI differs from privacy in any other digital application. In my opinion, all actors, whether or not they are involved in AI, must respect existing data privacy regulations. I am curious to know if some of you have different opinions.

For example, in Europe, the GDPR is a critical piece of legislation that aims to safeguard the privacy of individuals and their data. Similarly, California has laws protecting privacy and data security, such as the California Consumer Privacy Act (CCPA), with which companies must comply. By respecting these laws and regulations, actors can act in the best interests of their users and customers and build trust with them over the long term.

There is no “Big Brother” threat.

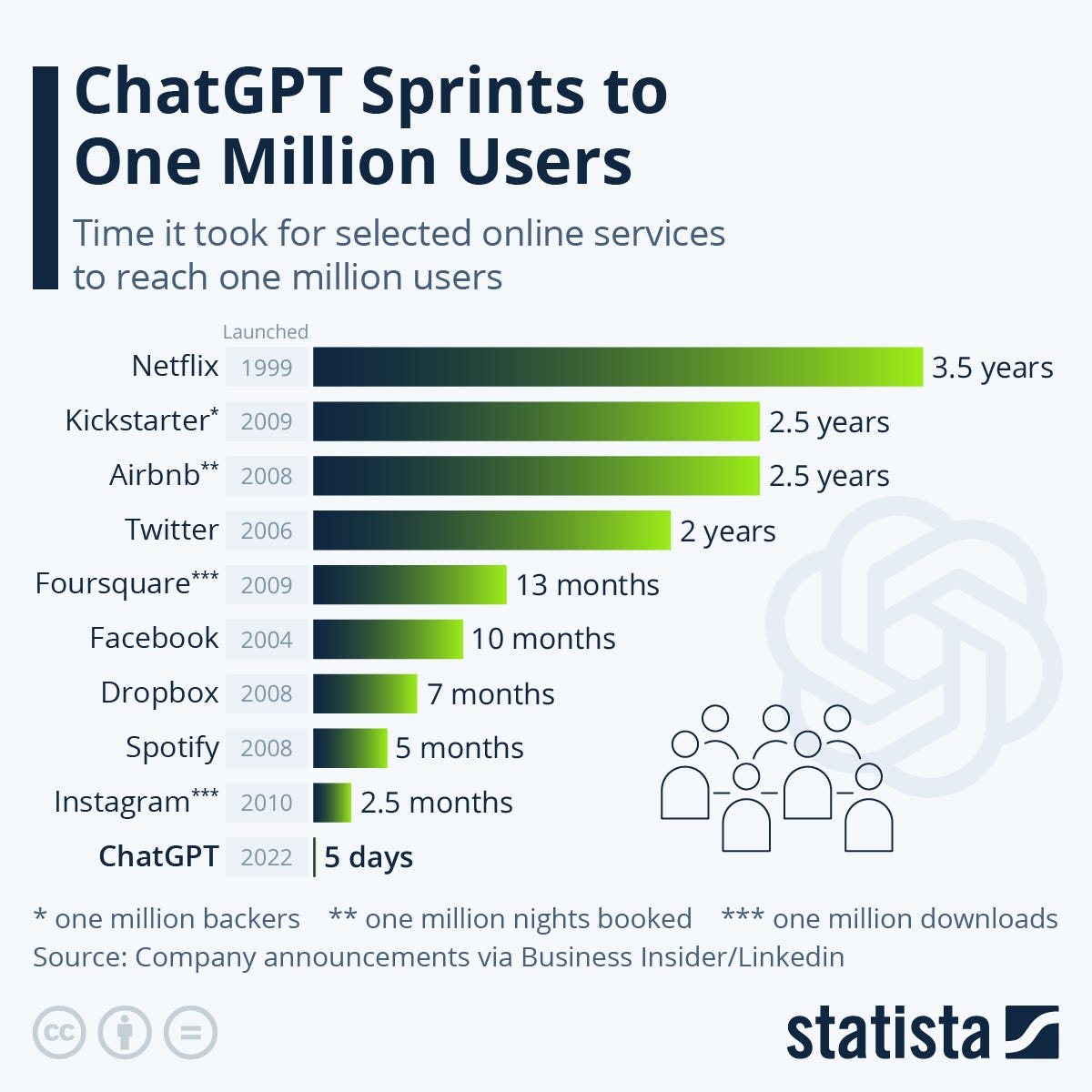

Everyone worldwide is rushing to test and interact with this new AI technology. ChatGPT has grown so much over the last few months that its success seems unstoppable. The chart above shows that it took just five days to reach 1 million users, compared with the two years it took Twitter to reach that milestone.

In the media and across the Internet, for the last few months, ChatGPT has equaled generative AI, and generative AI has equaled OpenAI. The whole technology was conflated with its “only” application (i.e., ChatGPT) and its creator (i.e., OpenAI).

Regarding security, this created the false impression that only ChatGPT could deliver such stunning performance and that OpenAI had a massive advantage over its competitors. People might think that every individual or business would come to rely on ChatGPT the way they rely on Apple’s or Google’s solutions today, and that this one prominent actor would rule them all.

I don’t want to deny OpenAI's achievements, but I don’t think their technology is unique. It is essential to acknowledge that emotional reactions to new technology are not uncommon. Feeling uncertainty and even fear is natural when faced with new and unfamiliar concepts. However, we must remember that being the first of its kind does not make something the only one. Nobody should fear that “all the data on the planet” will be captured by OpenAI (or Microsoft) software and applications. The success is stunning, but the reality is that many actors have mastered generative AI and LLMs.

First, Google announced PaLM 2, a family of foundation models in several sizes for different devices. Google has already integrated the model into 25 of its products, including Gmail, Search, and Workspace.

Google's latest language model, PaLM 2, has improved multilingual, reasoning, and coding capabilities. It is trained on text in more than 100 languages, enhancing its ability to understand, generate, and translate nuanced text. PaLM 2's dataset includes scientific papers and web pages with mathematical expressions, improving its logic, common-sense reasoning, and mathematics capabilities. It was also pre-trained on publicly available source code datasets, so it excels at popular programming languages like Python and JavaScript and can generate specialized code in languages like Prolog, Fortran, and Verilog.

OpenAI has no monopoly on generative AI technology. PaLM 2 is impressive and could prove as good as, if not better than, ChatGPT. Moreover, various startups, including Anthropic, Midjourney, and others, are building new models.

But there is more…

“Big Brother” has no moat.

“We have no moat,” claims a leaked essay, attributed to a Google researcher, that was recently published online.

Google and OpenAI have been key players in the progress of AI technologies, but the essay argues that they are not well positioned to win the AI “arms race.” Open-source communities have been making significant progress on major challenges in AI. The essay highlights several examples, such as running foundation models on a Pixel 6 phone. Open-source teams have also built personalized models that can be fine-tuned on a laptop in a single evening.

While OpenAI or Google models are still slightly better in quality, open-source models are faster, more customizable, more private, and capable of doing more with fewer resources.

There is no secret sauce to LLMs and generative AI models. People will not pay for a restricted model when free, unrestricted alternatives are comparable in quality. Giant models are slowing the incumbents down, and smaller, more efficient models will prove more effective.

Just as every application has a database, every application will use an LLM. If the price is right, that LLM could be hosted by OpenAI or Google. But other vendors will use their homegrown models, trained on their proprietary datasets. As a result, we can expect a wide range of applications running their own LLM stacks.
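The database analogy suggests an architectural habit that also limits lock-in: hide the model behind a narrow interface, just as applications hide their database behind a data-access layer. The Python sketch below is hypothetical throughout. `LLM`, `HostedLLM`, `LocalLLM`, and `summarize_ticket` are placeholder names that call no real vendor SDK; in practice, each backend would wrap a provider's API or a self-hosted model.

```python
from typing import Protocol


class LLM(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


class HostedLLM:
    # Placeholder for a vendor-hosted model; a real version would call the vendor's API
    def __init__(self, vendor: str) -> None:
        self.vendor = vendor

    def complete(self, prompt: str) -> str:
        return f"[{self.vendor}] answer to: {prompt}"


class LocalLLM:
    # Placeholder for a self-hosted, possibly fine-tuned open-source model
    def complete(self, prompt: str) -> str:
        return f"[local] answer to: {prompt}"


def summarize_ticket(llm: LLM, ticket: str) -> str:
    # Application code only sees the interface, so the backend can be swapped
    return llm.complete(f"Summarize this support ticket: {ticket}")


for backend in (HostedLLM("vendor-a"), LocalLLM()):
    print(summarize_ticket(backend, "Password reset link expired"))
```

Because `summarize_ticket` depends only on the structural `LLM` protocol, moving from a hosted model to a homegrown one is a configuration change rather than a rewrite. This is the same reason most applications are not locked into a single database engine.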

In conclusion, I don’t think there is a threat of being “locked in” to a single vendor, not even OpenAI or Google.

Diving Deeper - AI Risk Management Framework

As artificial intelligence (AI) systems become more widespread, so do the associated risks and threats. In response, a new set of frameworks is emerging to cover these threats and help mitigate AI-related risks. These frameworks consider various factors, including the potential for AI systems to be hacked or manipulated and to make biased or unfair decisions.

The US National Institute of Standards and Technology (NIST) published the Artificial Intelligence Risk Management Framework (AI RMF 1.0) in January 2023.

The document frames AI risks around seven characteristics of trustworthy AI systems:

Valid and Reliable

Safe

Secure and Resilient

Accountable and Transparent

Explainable and Interpretable

Privacy-Enhanced

Fair – with Harmful Bias Managed

Validation is the confirmation, through objective evidence, that the requirements for a specific intended use or application have been fulfilled. AI systems must be accurate, reliable, and generalize well to different data and settings to avoid harmful AI risks and maintain trustworthiness.

Safety calls for AI risk management tailored to context and severity. Risks that could lead to severe injury or death require the most urgent prioritization and the most careful management. Safety considerations must be applied from the beginning of the lifecycle, starting with planning and design.

Reliability is an item's ability to perform as required without failure for a given time interval under given conditions. AI systems should operate correctly under expected conditions and over time, including the entire system's lifetime.

Trustworthy AI relies on accountability, which in turn requires transparency. Transparency refers to the availability of information about an AI system and its outputs to those interacting with it, even if they are unaware of doing so. Transparency provides access to appropriate information based on the AI lifecycle stage and the role or knowledge of the AI actors or users.

Explainability refers to understanding how AI systems work, while interpretability refers to understanding what the outputs of AI systems mean in the context of their intended purpose. Both explainability and interpretability help users of an AI system to understand the system's functionality.

As the NIST document states, “Privacy generally refers to the norms and practices that help to safeguard human autonomy, identity, and dignity.”

Lastly, AI fairness includes addressing harmful bias and discrimination through concerns for equality and equity. Defining standards of fairness can be complex and vary among cultures or applications.

The NIST AI Risk Management Framework asks all AI system stakeholders to manage these risks throughout the lifecycle of AI applications. Its core organizes this work into four functions: Govern, Map, Measure, and Manage.
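A lightweight way to start is to track, for each AI system, which of the seven trustworthiness characteristics has documented supporting evidence. The Python sketch below does this. The characteristic names come from the framework, but the evidence format, the `gaps` helper, and the example chatbot assessment are hypothetical; the AI RMF itself does not prescribe any such data structure.

```python
# The seven trustworthiness characteristics listed in NIST AI RMF 1.0
CHARACTERISTICS = (
    "Valid and Reliable",
    "Safe",
    "Secure and Resilient",
    "Accountable and Transparent",
    "Explainable and Interpretable",
    "Privacy-Enhanced",
    "Fair – with Harmful Bias Managed",
)


def gaps(evidence: dict[str, list[str]]) -> list[str]:
    """Return characteristics with no documented evidence, in framework order."""
    unknown = set(evidence) - set(CHARACTERISTICS)
    if unknown:
        # Catch typos so a mislabeled entry is not silently ignored
        raise ValueError(f"Unknown characteristics: {sorted(unknown)}")
    return [c for c in CHARACTERISTICS if not evidence.get(c)]


# Hypothetical assessment of an internal support chatbot
chatbot_evidence = {
    "Valid and Reliable": ["Accuracy evaluation on historical tickets"],
    "Secure and Resilient": ["Threat model reviewed", "Penetration test report"],
    "Privacy-Enhanced": ["PII redaction before prompts leave the network"],
}

for characteristic in gaps(chatbot_evidence):
    print("No evidence yet:", characteristic)
```

The output is a to-do list rather than a score: each missing characteristic points to work still needed before the system can reasonably be called trustworthy.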

Conclusion

With AI startups blooming and being backed by VCs, we expect to see even more of them in the coming months. Today's LLMs are fantastic tools and should be considered a new component of modern software architecture, alongside backend containers, databases, and load balancers. As with any powerful tool, they come with drawbacks and new threats.

Over time, human societies have learned to balance the risks of new technologies with standards and moral norms. This was true of steel knives, motor cars, and nuclear power plants. Western societies also invented social safety nets, such as retirement plans and unemployment insurance, in response to industrialization.

Product design transparency is key to ensuring that customers trust the products they buy. It's important to be upfront about how a product is designed and what it's made of. To further enhance a product's security, it's essential to adopt a "shift left" approach and integrate security into the design process from the beginning. This concept is also known as "security by design." It involves performing a threat modeling exercise to identify possible threats and vulnerabilities that attackers could exploit. Given the field’s complex and rapidly evolving nature, this approach is critical for AI technology. By incorporating security into the design process early on, we can ensure that our products are secure and trustworthy.
