Decoding AI in Cybersecurity: Navigating Supervised, Unsupervised Learning, and the Critical Need for Explainability.

What level of transparency should you expect from your detection AI systems?

Hello Cyber Builders!

In this week's publication, we delve deeper into the intricate world of AI algorithms, distinguishing the nuanced roles of supervised and unsupervised learning. But we won't stop there. We'll unravel the often-overlooked yet vital aspect of AI - explainability. Why is it crucial?

This post is the second part of a three-part series:

Part 1: We examined the Detection Engineer’s Perspective, emphasizing why 'Explainability Matters and Context is King.' (link)

Part 2: We're here to dissect 'Supervised vs Unsupervised Learning and the Importance of Explainability.'

Part 3: Stay tuned for practical 'Recommendations for Cyber Builders when integrating AI in the UX of their cybersecurity solutions and programs.'

I don’t want to spend too much time on machine learning definitions, but here is a very short reminder before we go into the specifics for cybersecurity.

Supervised Learning: The Guided Approach

Supervised learning in AI is akin to a student learning under the guidance of a teacher. The 'teacher' (in this case, the training data) provides examples and labels to the 'student' (the AI algorithm). Just like a student learns to associate a specific answer with a question, the algorithm learns to predict outcomes based on the input data and its corresponding label.

Real-World Example: Email Spam Filters

Consider an email spam filter. It's trained with thousands of emails, each labeled as 'spam' or 'not spam.' Using techniques like Support Vector Machines (SVM) or Neural Networks, the algorithm learns to distinguish between spam and non-spam emails. When it encounters a new email, it uses what it has learned to classify it.
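To make the idea concrete, here is a minimal sketch of a supervised spam classifier. It is a toy, not a production filter: the six training emails are invented, and I swapped in a tiny Naive Bayes model (word counts with Laplace smoothing) instead of an SVM or a neural network so that it runs with the Python standard library alone. The `train` and `classify` names are mine.

```python
import math
from collections import Counter

# Toy labeled training set (invented for illustration).
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "not spam"),
    ("lunch on monday with the team", "not spam"),
    ("please review the project agenda", "not spam"),
]

def train(examples):
    """Count words per label; these counts are the 'learned' model."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label with the highest log-probability (Laplace smoothing)."""
    vocab = set()
    for counts in word_counts.values():
        vocab |= set(counts)
    total = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        n_words = sum(counts.values())
        score = math.log(label_counts[label] / total)
        for word in text.split():
            score += math.log((counts[word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

model = train(TRAINING)
print(classify("free prize inside", *model))            # spam
print(classify("agenda for the team meeting", *model))  # not spam
```

The labels do all the teaching here: the model only knows "free" is suspicious because the examples containing it were tagged as spam.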

Unsupervised Learning: Discovering Patterns Independently

On the other hand, unsupervised learning is like a student exploring a subject without direct supervision. The AI algorithm is given data but no explicit instructions on what to do with it. It must explore the data and identify patterns or structures on its own.

Real-World Example: Customer Segmentation in Marketing

A typical example is customer segmentation in marketing. The algorithm, perhaps using a method like K-means clustering, analyzes customer data and groups customers into clusters based on similarities in their buying behaviors or preferences. This is done without pre-labeled categories, allowing the algorithm to uncover natural groupings that may not have been initially apparent.
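Here is a small sketch of that idea, assuming six made-up customers described by two features. It implements plain K-means (Lloyd's algorithm) with the standard library; seeding the centroids with the first `k` points is a simplification I chose to keep the run deterministic, not what a real library would do.

```python
import math

# Hypothetical customers: (orders per month, average basket in dollars).
CUSTOMERS = [(1, 20), (2, 25), (1, 30), (10, 200), (12, 220), (11, 180)]

def kmeans(points, k, iterations=10):
    """Plain Lloyd's algorithm; the first k points seed the centroids."""
    centroids = list(points[:k])
    assignments = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return assignments, centroids

assignments, centroids = kmeans(CUSTOMERS, k=2)
print(assignments)  # [0, 0, 0, 1, 1, 1]
```

Nobody told the algorithm that there are "occasional" and "frequent" buyers; the two groups fall out of the data. In practice you would scale the features first, since the basket value dominates the distance here.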

Application in Cybersecurity

These learning methods play a crucial role in cybersecurity, and both give cybersecurity professionals valuable tools.

Supervised learning algorithms might detect known threats by being trained on network traffic datasets labeled as 'malicious' or 'benign.' Meanwhile, unsupervised learning algorithms excel in identifying novel threats or unusual network behaviors by detecting anomalies or patterns that deviate from the norm without prior labeling.

Following our definition in the last post, supervised learning algorithms can generate alerts because they match something malicious on which they have been trained.

Unsupervised learning is best for detecting anomalies. If data points are coherently grouped in a cluster, outliers are something to look at. Outlier detection involves identifying data points that deviate significantly from most data. Consider the scenario of monitoring network traffic in an organization. An unsupervised learning algorithm, such as Isolation Forest or DBSCAN, is deployed to analyze the traffic patterns. These algorithms are adept at spotting outliers - unusual patterns or behaviors that differ from the norm. For instance, an unexpected surge in data transfer to an unfamiliar external server could be flagged as an outlier. This could indicate a potential data exfiltration attempt, which might otherwise go unnoticed amidst regular network activity.
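As a rough sketch of outlier detection on traffic volumes, the snippet below flags hours where a host sends far more data than usual. To stay within the standard library, it uses a much simpler stand-in than Isolation Forest or DBSCAN: a modified z-score based on the median and the median absolute deviation (MAD). The traffic numbers, the `find_outliers` name, and the 3.5 threshold (a commonly used rule of thumb) are assumptions for illustration.

```python
import statistics

# Hypothetical megabytes sent per hour by one host to external servers.
HOURLY_MB_OUT = [12, 15, 11, 14, 13, 16, 12, 950, 14, 13]

def find_outliers(values, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread at all, so the score is undefined
    return [
        (i, v)
        for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]

print(find_outliers(HOURLY_MB_OUT))  # [(7, 950)]
```

No label ever said that 950 MB is "bad". The point stands out only because it deviates from what this host normally does, which is exactly why the analyst still has to decide whether it is exfiltration or a legitimate backup.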

Explainability in AI - Demystifying the “Detection-Making” Process

Explainability in AI sheds light on how AI systems arrive at their conclusions. In cybersecurity, where AI plays a crucial role in detecting threats and anomalies, explainability is not just a nice-to-have feature; it's a necessity if Detection Engineers are to trust and rely on these systems.

The core of explainability is to provide a clear, understandable rationale behind an AI system's decisions. This means articulating what data the AI analyzes, what patterns it recognizes, and why it's classifying certain behaviors or events as threats.

The Imperative of Explainability in Detection Engineering

In the specialized field of detection engineering, explainability goes beyond mere transparency; it's about deeply understanding the 'why' and 'how' behind the outputs of machine learning classifiers. This level of insight is critical in determining how each output contributes to the overarching threat detection and response process.

For instance, if an AI system flags an unusual network traffic pattern as a potential security threat, explainability would involve detailing which aspects of the traffic are unusual, how they differ from standard patterns, and why these differences are significant.

Explainability in this context involves dissecting and comprehending the decision-making process of ML classifiers. It's not just about knowing that a classifier has flagged an anomaly; it's about understanding the factors that influenced this decision.

The Role of Explainability and The Risk of 'Black Box' AI

The essence of explainability is twofold: firstly, it empowers detection engineers with the knowledge to trust and verify the AI's decisions. When a classifier identifies a potential threat, the engineer can understand the logic behind this determination, assessing its relevance and accuracy. Secondly, it enables engineers to fine-tune the AI system, enhancing its precision and reducing false positives. By understanding the 'how' of a classifier's output, engineers can make informed adjustments to ensure the system is attuned to the specific security landscape of their organization.

A significant challenge in AI-driven cybersecurity is the 'black box' problem, where the decision-making process of AI systems is opaque and not easily understood by humans. This lack of transparency can lead to distrust and hesitancy in accepting AI findings. If an AI system identifies an anomaly but cannot explain why it's considered weird, end-users may question the validity of the finding. Is it a genuine threat or just a false positive? Without clear explanations, even the most sophisticated AI detections can be ineffective, as users might ignore or second-guess crucial alerts.

The Layers of Explainability in AI Systems

Explainability in AI, especially within detection engineering, is not a one-size-fits-all concept. It can vary significantly depending on the system, the specific use case, and the security requirements. These varying levels of explainability range from basic interpretations of classifier scores to in-depth analyses of the machine-learning techniques and even code-level inspections.

Surface-Level Explainability: Classifier Scores

At the most basic level, explainability might involve explaining a classifier's score. An excellent example of this is an AI system that quantifies how unusual an administrative connection between two devices is over a given time. In this case, a percentage score could indicate the degree of anomaly detected in the connection, offering a straightforward, quantifiable metric that detection engineers can quickly interpret and act upon.
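One simple way such a percentage could be computed, sketched below, is as a percentile rank: the share of past observations that were lower than what we see today. This is only an assumption about how a score like this might work, not a description of any vendor's implementation; the history data and the `unusualness_percent` name are hypothetical.

```python
# Hypothetical daily counts of administrative connections between two devices.
HISTORY = [0, 1, 0, 2, 1, 0, 1, 3, 0, 1]

def unusualness_percent(history, observed):
    """Percentage of past observations strictly below the observed value."""
    below = sum(1 for h in history if h < observed)
    return 100.0 * below / len(history)

print(unusualness_percent(HISTORY, 1))  # 40.0
print(unusualness_percent(HISTORY, 8))  # 100.0
```

A score defined this way is easy to explain in one sentence ("higher than 100% of the last ten days"), which is the whole appeal of surface-level explainability.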

Mid-Level Explainability: Understanding Inputs and Techniques

At the next level, detection engineers need to understand the inputs and machine learning techniques that make up a classifier. This level of explainability delves into the 'nuts and bolts' of the AI model, providing insights into what data is being analyzed and how the algorithm processes this data to arrive at a decision. For instance, understanding the features a classifier considers and how these features are weighted can give detection engineers a more nuanced view of the AI's decision-making process.
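For a linear classifier, "how the features are weighted" can be shown directly: each feature's contribution to the score is its weight multiplied by its value. The sketch below assumes a logistic-regression-style model with made-up weights and feature names (`bytes_out_zscore`, `rare_destination`, `off_hours`); none of them come from a real product.

```python
import math

# Hypothetical weights of an already-trained linear classifier (illustrative values).
WEIGHTS = {"bytes_out_zscore": 1.2, "rare_destination": 2.0, "off_hours": 0.5}
BIAS = -3.0

def explain(features):
    """Return the alert probability and the per-feature contributions, largest first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

event = {"bytes_out_zscore": 2.5, "rare_destination": 1.0, "off_hours": 1.0}
probability, ranked = explain(event)
print(f"alert probability: {probability:.2f}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Showing the ranked contributions next to the score tells the detection engineer which input drove the alert. Non-linear models need other attribution techniques, but the goal is the same.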

Advanced-Level Explainability: Code-Level Transparency

In certain scenarios, particularly in highly sensitive or classified environments, a more advanced level of explainability may be necessary. This could involve examining the actual implementation of a classifier at the code level. Such deep transparency is rare but crucial in contexts where AI transparency and explainability are paramount. It gives detection engineers and security experts an exhaustive understanding of how the AI works, leaving no room for ambiguity in its operations.

Note that this code-level transparency is what you already get when you use an IDS (e.g., Snort signatures), a SIEM ruleset (e.g., Sigma signatures), or malware detection (e.g., Yara signatures). So it is not that uncommon, and vendors should consider it.

Vendor’s Challenges of Achieving Explainable AI in Cybersecurity

The journey towards fully explainable AI in cybersecurity is fraught with challenges, each adding a layer of complexity. These challenges are not only technical; they also involve legal and ethical considerations that affect how AI systems are developed, deployed, and interpreted.

Intellectual Property Rights

A significant hurdle in achieving explainable AI is the protection of intellectual property rights. AI algorithms, the culmination of extensive research and development efforts, are often closely guarded secrets. The need to protect these proprietary technologies can restrict the level of transparency and explainability that companies can provide. Protecting intellectual assets is a balancing act, especially in a field where transparency is crucial for trust and reliability.

Data Privacy and Security

Data privacy and security are paramount, particularly when AI systems process sensitive or personal information. Complying with data protection regulations and ensuring that AI explainability does not compromise data security add another dimension to the challenge. Cybersecurity firms must navigate these waters carefully, maintaining user trust while safeguarding the data entrusted to them.

Illustrative Example: Darktrace's Approach

Darktrace, a leading AI cybersecurity company, exemplifies how these challenges can be navigated. While grappling with the need to protect its IP and ensure data privacy, Darktrace endeavors to make its AI systems as explainable as possible. It does this through user-friendly interfaces, detailed visualizations, and clear communication about how its AI models function and why they make certain decisions. This approach enhances user trust and showcases potential pathways to making AI in cybersecurity more transparent and understandable.

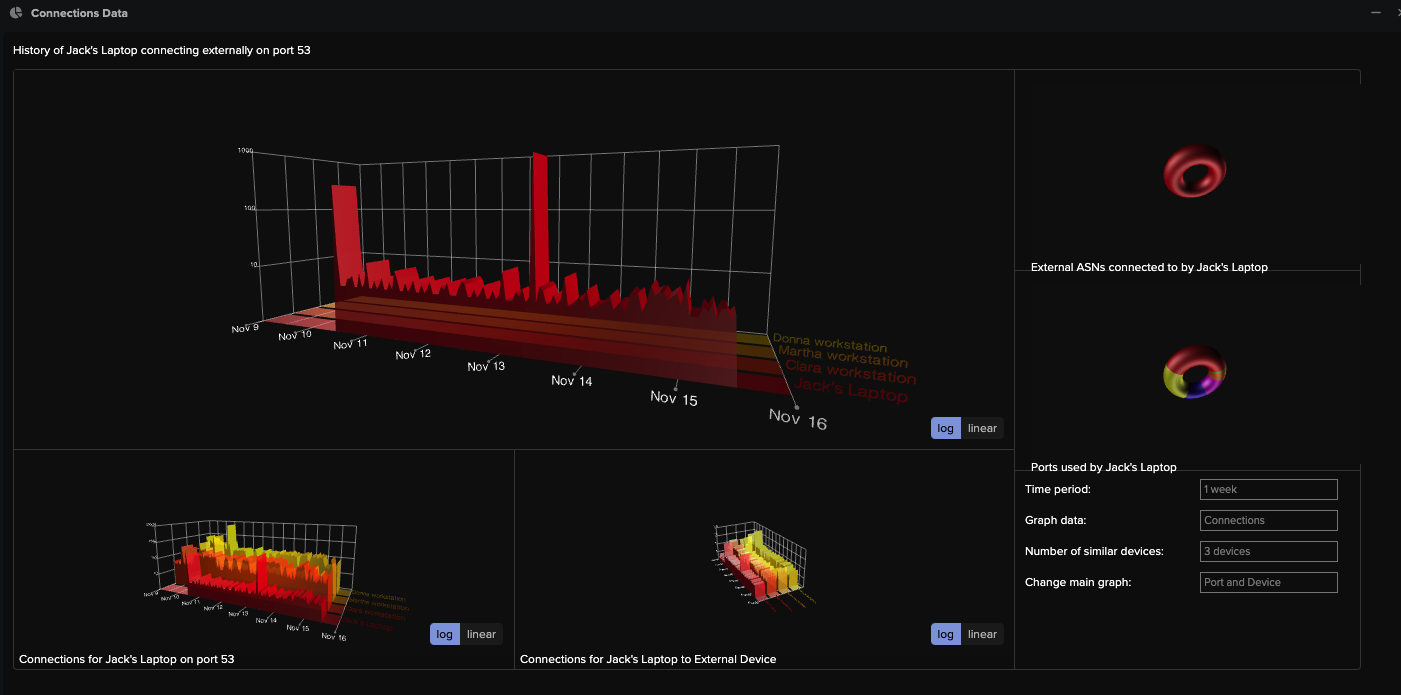

The lower third of the screenshot provides a clear view of the detection logic that led to the alert being raised. Two ML classifiers, namely 'rare domain' and 'DGA domain score', contributed significantly to triggering the alert.

Providing additional context on the classifier's output, such as access to raw data and visualizations, is crucial. This helps the analyst understand the unusual activity and take the necessary actions.

Here's an example visualization that is linked to the above detection. It contrasts the external connections on port 53 from Jack’s device with the same behavior on similar devices (automatically detected).

Conclusion

AI can potentially transform cybersecurity, but ensuring that AI systems are transparent, trustworthy, and interpretable is essential. We must build intelligent and interpretable AI solutions that advance cybersecurity while preserving transparency and trust.

Next week, I am zooming in on UX principles and best practices to integrate detection systems (whether AI or not) into applications. Subscribe to Cyber Builders, as you don’t want to miss it. Join the conversation and share your thoughts on the future of AI in cybersecurity.

In the meantime, I’d appreciate your feedback and comments.

Laurent 💚