Is Bard the New Kid on the Cryptography Block?

I tested the core cryptography knowledge of Bard, Google's new AI assistant.

Hello again 👋

I am sending a second short post this week to update you on recent research I conducted 🧐. I evaluated the accuracy of Google Bard regarding cryptography knowledge, and the results are somewhat surprising! Let's take a closer look at them.

July 2023 Bard Updates

Bard is a chatbot released by Google in 2023. During the Google I/O 2023 event, it received a significant update built on Google's newest large language model (LLM), PaLM 2. Bard has unique capabilities, such as searching the web and exporting results to Google Docs with a single click.

In July 2023, Google opened Bard to EU countries and expanded its language support to 40 languages. Additionally, multi-modal features were added, allowing users to upload an image and ask about it during their conversation with the chatbot.

It was time for me to test this new technology! Last April 23, I tested OpenAI's GPT-3 and GPT-4 on their knowledge of cryptography and cybersecurity. Now that I have access to Bard, I wanted to evaluate how much it knows about cybersecurity. Check out the April post (“GPT-4 remarkable performance to Certified Ethical Hacker exam”) here.

At the time, I was amazed by GPT-4's extensive knowledge, and running the same tests on Bard and the Google PaLM 2 models was exciting. Today, I want to share my first steps: manual testing through the web interface with the same basic cryptography questions I had asked ChatGPT in February.

I call them “basic” because you should see them as “knowledge retrieval.” The questions do not ask the student (or the AI, in this case) to “reason.” You would find them in an “Introduction to Cryptography” course in colleges worldwide. For a student, these questions are about “retrieving” the correct answer from “memory.” For an AI, the answer should be part of the model, as knowledge encoded during initial training or fine-tuning.

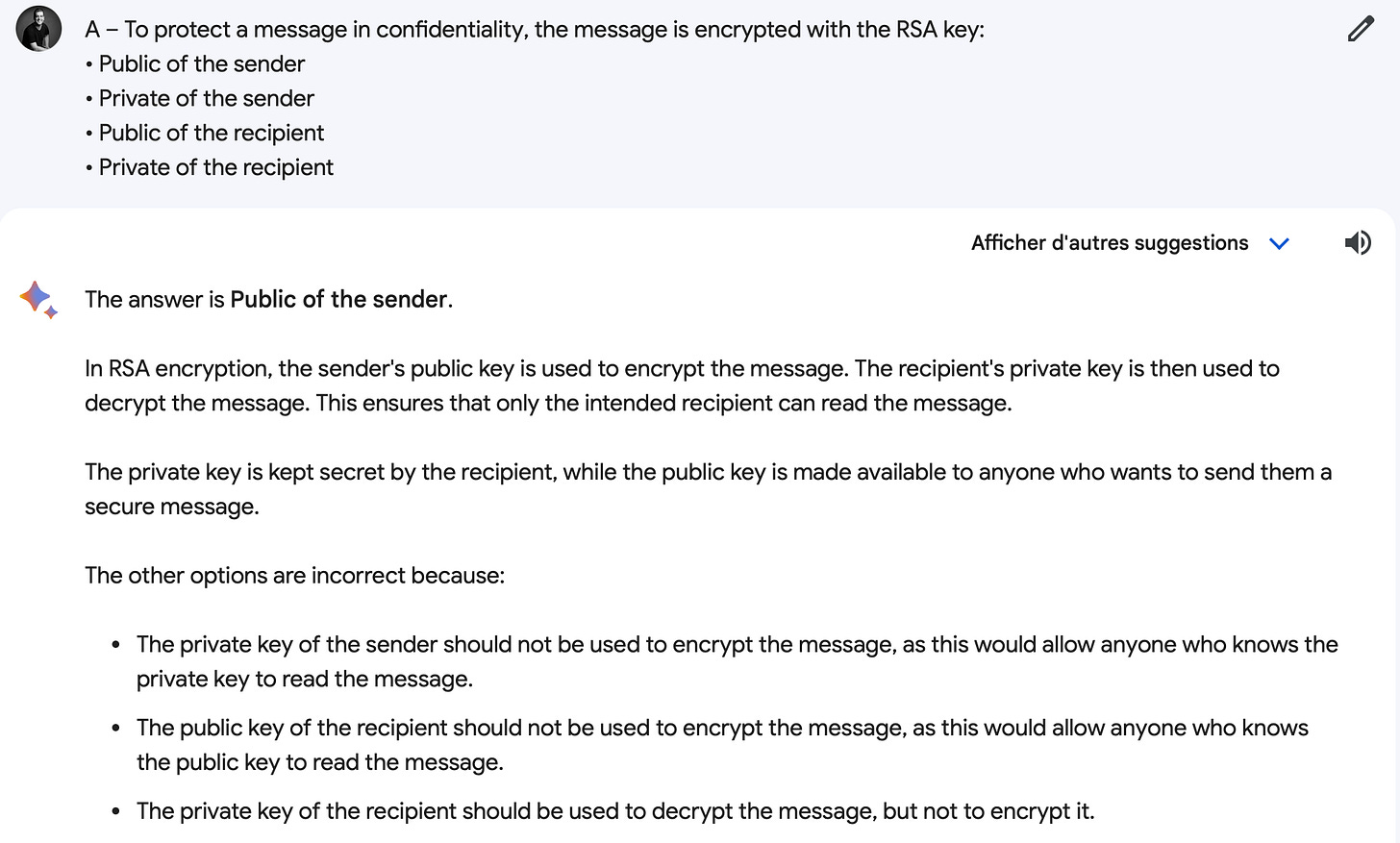

Basic question… Big mistake

The most concerning issue I found is a big mistake Bard made about using asymmetric encryption to protect a confidential message. In my two-day Cryptography Introduction Course, I aim to give students the basic ideas about using algorithms and keys to deliver security services, without letting them mix up secret keys, private keys, hash algorithms, etc. I was deeply disappointed to see Bard get this wrong.

To send a confidential message, you encrypt it with the recipient's public key. The recipient then decrypts it with their private key.
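To make the direction of the keys concrete, here is a minimal sketch using textbook RSA with toy numbers. The primes, the exponent, and the function names are my own illustration (not what Bard or the exam used), and the key is far too small and unpadded for any real use.

```python
# Toy RSA key pair (textbook-sized numbers, for illustration only).
P, Q = 61, 53
N = P * Q                              # public modulus
E = 17                                 # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # private exponent (modular inverse of E)

recipient_public = (E, N)              # anyone may know this
recipient_private = (D, N)             # only the recipient knows this


def encrypt(message: int, public_key: tuple) -> int:
    """Sender side: encrypt with the RECIPIENT's public key."""
    e, n = public_key
    return pow(message, e, n)


def decrypt(ciphertext: int, private_key: tuple) -> int:
    """Recipient side: decrypt with the recipient's own private key."""
    d, n = private_key
    return pow(ciphertext, d, n)


message = 65                           # must be smaller than N in this toy setup
ciphertext = encrypt(message, recipient_public)
recovered = decrypt(ciphertext, recipient_private)
print(recovered == message)
```

The point to remember is only which key goes where: the sender never needs the recipient's private key, and the public key alone cannot undo the encryption.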

To sign a message, and so provide authenticity and integrity, you encrypt the hash of the message with your private key. The recipient decrypts it with your public key and compares the resulting value with the hash of the received message.
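The signing flow can be sketched the same way. This is a simplified illustration under my own assumptions: a toy RSA key, a SHA-256 hash reduced modulo the tiny modulus so it fits, and hypothetical helper names. Real signature schemes use large keys and proper padding instead.

```python
import hashlib

# Toy RSA key pair for the signer (illustration only, not secure).
P, Q = 61, 53
N = P * Q
E = 17                                 # signer's public exponent
D = pow(E, -1, (P - 1) * (Q - 1))      # signer's private exponent


def digest(message: bytes) -> int:
    """Hash the message; reduced mod N only because the toy key is tiny."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Signer side: transform the hash with the signer's PRIVATE key."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recipient side: undo with the signer's PUBLIC key and compare hashes."""
    return pow(signature, E, N) == digest(message)


original = b"pay 10 EUR to Alice"
signature = sign(original)
print(verify(original, signature))

# A modified message is expected to fail verification, unless the
# toy-sized digests happen to collide (possible with such a small N).
print(verify(b"pay 1000 EUR to Alice", signature))
```

Compared with encryption, the roles are reversed: the private key produces the value, and anyone holding the public key can check it.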

Bard is not searching for updated knowledge.

I was expecting Bard to give fresher answers and be able to search the web. Bard should be capable of leveraging the vast Google web index. Previously, I found a limitation in ChatGPT: its cutoff date was late 2021, and it was not connected to the web. So I expected more from Bard.

But Bard also failed the test of getting fresher data.

It is pretty concerning that Google Bard, an AI assistant developed by one of the largest technology companies in the world, provided incorrect answers to basic cryptography questions. It is even more unacceptable, considering the correct answers can be easily found through a simple Google search. As a result, the reliability of Google Bard's knowledge and capabilities may be questioned.

Lastly, it does not give a localized answer. In France, a “Yes Card” is a smart card that always answers “Yes,” whatever PIN code is provided. It was popularized in the late 90s by a court case.

See the 🇫🇷 details on Wikipedia https://fr.wikipedia.org/wiki/YesCard.

General impressions are still excellent.

I must say that the overall impression remains very good. After putting Bard through the entire "Crypto Intro" college exam, I would rate its answers 16 out of 20. Most of the answers are correct, and the reasoning provided by the agent is well-written, with the necessary details. There are big mistakes, but only a few.

I want to note that some of Bard's answers were better than ChatGPT's.

For example, many of my students, and ChatGPT, mistakenly swapped the key size and the block size for symmetric encryption. The block size can have security implications because of the birthday paradox. Bard provided accurate answers and explained the difference between block size and key size.
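To show why the block size matters independently of the key size, here is a rough back-of-the-envelope sketch. It uses the usual birthday approximation (collisions between blocks become likely after about 2^(n/2) blocks for an n-bit block); the function name and the two example ciphers are my own choices for illustration.

```python
def birthday_bound_bytes(block_bits: int) -> int:
    """Approximate data volume after which block collisions become likely.

    Birthday approximation: about 2^(n/2) blocks for an n-bit block size.
    """
    blocks = 2 ** (block_bits // 2)
    bytes_per_block = block_bits // 8
    return blocks * bytes_per_block


examples = [
    ("64-bit block (e.g. 3DES)", 64),
    ("128-bit block (e.g. AES)", 128),
]
for name, bits in examples:
    gib = birthday_bound_bytes(bits) / 2**30
    print(f"{name}: about {gib:,.0f} GiB under one key")
```

With a 64-bit block the bound is in the tens of gigabytes, which a busy connection can reach, while a 128-bit block pushes it far beyond practical traffic volumes. The key size does not appear anywhere in this calculation, which is exactly why the two sizes should not be confused.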

Another question asked for an explanation of the cipher suite from a TLS negotiation, as displayed by a web browser or found in OpenSSL logs. ChatGPT could decode part of it, but Bard did a better job, including understanding that ECDH is an elliptic curve Diffie-Hellman exchange. Still, there are some misunderstandings in its answer.
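For readers who have never decoded one, here is a small sketch of what "explaining a cipher suite" means. The sample name is a common OpenSSL-style string that I picked for illustration; it is not necessarily the one from my exam. The lookup tables and the function are hypothetical and only handle five-part names of this shape.

```python
# Hypothetical lookup tables, deliberately incomplete.
KEY_EXCHANGE = {
    "ECDHE": "ephemeral elliptic-curve Diffie-Hellman",
    "DHE": "ephemeral Diffie-Hellman",
}
AUTHENTICATION = {
    "RSA": "RSA certificate",
    "ECDSA": "ECDSA certificate",
}


def explain_cipher_suite(name: str) -> dict:
    """Split an OpenSSL-style name such as KX-AUTH-CIPHER-MODE-HASH."""
    parts = name.split("-")
    if len(parts) != 5:
        raise ValueError(f"unsupported cipher suite format: {name}")
    kx, auth, cipher, mode, hash_name = parts
    return {
        "key_exchange": KEY_EXCHANGE.get(kx, kx),
        "authentication": AUTHENTICATION.get(auth, auth),
        "cipher": cipher,        # symmetric algorithm and key size
        "mode": mode,            # mode of operation
        "hash": hash_name,       # hash used by the handshake
    }


suite = explain_cipher_suite("ECDHE-RSA-AES128-GCM-SHA256")
for field, value in suite.items():
    print(f"{field}: {value}")
```

A good answer to the exam question names each of these parts and what it is used for, which is where both chatbots still stumbled on some details.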

Conclusion

After analyzing these results, Bard's reliability may be somewhat questionable. While it did a better-than-average job answering crypto-related questions, the fact that it could not provide fully correct answers on specific topics may be cause for concern.

At a time when AI is becoming widely used, especially LLMs being integrated into day-to-day applications, it raises some questions. Startups are pioneering the topic, showing how easy it is to inject “fake facts” into an LLM. Check out this piece, from Mithril Security, for more on this topic.

I think it is essential to thoroughly evaluate the performance of Bard and other LLMs and to consider the potential consequences of any inaccuracies. As our world increasingly relies on technology, the need for strong cybersecurity measures has never been more pressing. However, deploying a model without evaluating its accuracy can lead to disastrous consequences. That's why the cybersecurity industry should invest more in projects that focus on assessing the accuracy of these models, starting with the cybersecurity knowledge that's built into them.

I’ll be happy to have a conversation around this. Feel free to contact me to see how we can set up something.

What an exciting time: AI is a new technology with massive capacities that raises massive security issues. Let’s work on it!

Next week I am publishing the last post from my SMB series. Stay tuned!

Laurent