The Evolution of AI in Cybersecurity: Benchmarking GPT-4, Mistral Large2 and Smaller Models

GPT-4's Early Success in Cybersecurity; Smaller Models Help with Sensitive Data; Mistral Large2 Rules Them All!

Hello Cyber Builders 🖖

Last year, I published one of my first analyses of how well AI models, specifically GPT-4, could solve cybersecurity questions. It was a benchmark to see how much knowledge GPT-4 had on a crucial cybersecurity exam, the Certified Ethical Hacker (CEH) certification. The results were impressive—GPT-4, the best AI model at the time, showed remarkable capabilities across various exam sections.

You can check out the article here.

Please do me a favor and share this article with five contacts around you. It will help spread my content, and the more readers I have, the more motivated I am to keep posting new articles for Cyber Builders.

GPT-4's Remarkable Performance in CEH Exam

This week, while I am attending the RSA Conference in "beautiful San Francisco," I wanted to share some exciting research I've done in the past few weeks.

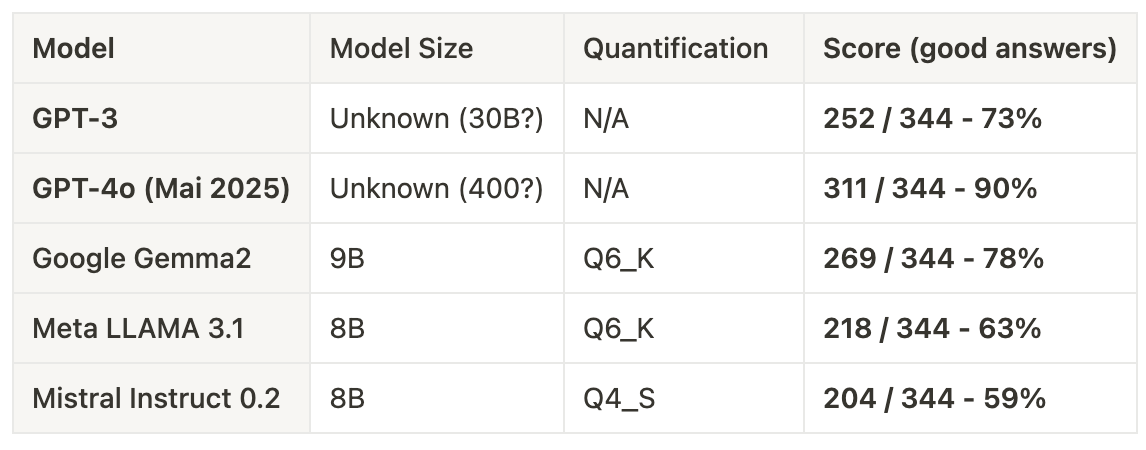

Below are the latest benchmarks I've run on the same dataset. This time, I pushed the envelope further by comparing GPT-4 with other models, including smaller ones that have launched over the past year.

And there is a new winner in the benchmark: Mistral AI's Large2!

GPT-4's Early Success in Cybersecurity

Understanding the CEH Exam

The Certified Ethical Hacker (CEH) exam is a rigorous test covering various cybersecurity topics, including legal frameworks, governance, attack schemes, cryptography, and networking. What makes it so challenging is that no one is expected to score perfectly. In fact, the pass mark hovers around 70%, meaning even seasoned professionals aren't required to know every answer.

This made the CEH exam a pretty good benchmark for testing how much cybersecurity knowledge GPT-4 possessed. Last year, I ran GPT-4 through a series of questions in different sections of the CEH exam, and the results were stunning at the time.
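To make the scoring concrete, here is a minimal sketch of how a multiple-choice run like this could be graded per exam section and compared against the roughly 70% pass mark. The field names (`section`, `expected`, `answer`) and the sample rows are made up for illustration; they are not the actual benchmark data or harness.

```python
from collections import defaultdict

PASS_MARK = 0.70  # the CEH pass mark hovers around 70%


def score_by_section(results):
    """Return ({section: accuracy}, overall_accuracy) for graded answers.

    `results` is a list of dicts with hypothetical keys:
    "section", "expected" (the correct choice) and "answer" (the model's choice).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for row in results:
        totals[row["section"]] += 1
        # Compare choices case-insensitively, ignoring stray whitespace.
        if row["answer"].strip().upper() == row["expected"].strip().upper():
            correct[row["section"]] += 1
    per_section = {s: correct[s] / totals[s] for s in totals}
    overall = sum(correct.values()) / sum(totals.values()) if totals else 0.0
    return per_section, overall


# Made-up sample rows, purely to show the shape of the data.
sample = [
    {"section": "Cryptography", "expected": "B", "answer": "b"},
    {"section": "Cryptography", "expected": "D", "answer": "A"},
    {"section": "Scanning", "expected": "C", "answer": "C "},
    {"section": "Scanning", "expected": "A", "answer": "A"},
]

per_section, overall = score_by_section(sample)
for section, accuracy in sorted(per_section.items()):
    print(f"{section}: {accuracy:.0%}")
print(f"Overall: {overall:.0%} ({'pass' if overall >= PASS_MARK else 'fail'})")
```

Keeping the per-section breakdown alongside the overall score makes it easy to see whether a model's mistakes cluster in one topic or are spread across the exam.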

GPT-4’s Performance in 2023

Despite the depth and complexity of the subject matter, GPT-4 demonstrated remarkable knowledge, achieving results comparable to—or better than—those of many professionals in the field.

GPT-4o Slightly Pushes the Limits

Fast-forward to this year, and things have advanced: OpenAI has published several upgrades. With the latest GPT-4o upgrade, I reran the same benchmark. To be honest, I am still astonished to see that a general-purpose AI can master such a specialized domain as cybersecurity. The improvements are significant, even if they are just a few percent.

Real-world Use Cases

This brings up some exciting possibilities—AI can serve as a tutor, mentor, or assistant for cybersecurity professionals or students. Imagine having a real-time conversation with an AI model that helps you solve exercises, explains cryptography principles, or assists you in understanding complex attack vectors.

I covered some of these use cases in a previous post on AI assistants, but it's worth highlighting here again: AI's capacity to provide on-demand training is a game-changer. GPT-4 can act as a virtual mentor. It does not judge you, and you can ask it any question.

Privacy and Cost Considerations

The Dilemma of Sensitive Data

GPT-4 undeniably possesses immense power, but concerns about privacy and cost are significant, particularly in cybersecurity, where sensitive information is frequently at stake.

Would you want to send all your security data to an external AI, even a very knowledgeable one?

Depending on online models like GPT-4 or Gemini may not be ideal in situations involving suspected cyber attacks or the handling of sensitive data such as personally identifiable information (PII) or logs. Moreover, the regular use of large AI models can incur considerable costs, making them less feasible for everyday use.

As I discussed in "Centralizing: The Three Driving Forces Shaping the Future of AI," I believe smaller models and open source will help Cyber Builders use AI in sensitive cybersecurity contexts.

Over the past year, I have rigorously benchmarked several smaller models to assess their effectiveness in handling cybersecurity tasks compared to GPT-4.

Benchmarking Smaller Models

I've tested a range of models over the last year, and while they're improving, none have reached the level of GPT-4.

Smaller models do have their place, though. They offer more control, are cost-effective, can be run on your own laptop, and are safer to use when privacy is a concern. However, their performance, especially in cybersecurity-related tasks, lags behind the best online models.

But I bet you can fine-tune them or create LoRA adapters to build your own specialized cybersecurity models.
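Why are LoRA adapters attractive for small, locally run models? Instead of updating a full weight matrix of size d_out x d_in, LoRA trains two thin matrices of rank r, which adds r x (d_out + d_in) trainable weights. The quick arithmetic below illustrates the gap. The dimensions and rank are illustrative assumptions and do not describe any specific model from the benchmark.

```python
def full_params(d_out, d_in):
    """Weights updated when fine-tuning one d_out x d_in matrix directly."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Weights LoRA trains for the same matrix: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes only (assumed, not taken from a benchmarked model).
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)

print(f"Full fine-tuning: {full:,} weights")
print(f"LoRA (rank {rank}): {lora:,} weights ({lora / full:.2%} of full)")
```

That small trainable footprint is what makes building a specialized adapter on modest hardware plausible.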

Mistral Large2 Is on Par with GPT-4 for Cybersecurity Knowledge

Mistral Large2 has demonstrated remarkable capabilities, particularly in cybersecurity knowledge, performing on par with what GPT models achieved just a year ago.

This highlights the rapid advancements in the field of generative AI and LLMs and underscores Mistral Large2's competitiveness in understanding and addressing complex cybersecurity challenges.

In both cases (GPT-4 or Large2), the mistakes are spread across various categories, such as Reconnaissance, Scanning, Sniffing, Web, Mobile, Wireless, Malware, and Cryptography.

These large 2024 models have comprehensive cybersecurity knowledge.

Even better news is that you can run this model on various cloud providers, ensuring that your data remains safe and private. Check out the Mistral announcement post.

Conclusion

AI models like GPT-4 have already demonstrated their potential in mastering a highly specialized field like cybersecurity. However, smaller models offer an alternative for those concerned about privacy and cost.

Hey Cyber Builders, what do you think? Please leave your comments below 👇

Laurent 💚