Building Effective LLM-Based Apps: A Cybersecurity Chatbot

Building a Cybersecurity Chatbot in Minutes, Exploring its Limitations, Improving Accuracy with Trusted Data.

Hello Cyber Builders ✋🏽

This week, let’s build a cybersecurity LLM-based application. I am going to dive deep into how to build a chatbot and how to get some good outputs, looking at how to prevent hallucinations and other issues. I’ll also explain how to enhance answers with your curated and accurate.

This post is part of a series that explores the intersection of AI and Cyber. In this series, we will highlight various use cases, examine the risks associated with AI, and provide recommendations for organizations looking to implement AI-based solutions.

Previous Posts

🔗 Post 1 - AI Meets Cybersecurity: Understanding LLM-based Apps: A Large Potential, Still Emerging, but a Profound New Way of Building Apps

🔗 Post 2 - A new UX with AI: LLMs are a Frontend Technology: Halo effect and Reasoning, NVIDIA PoV, History of UIs, and 3 Takeaways on AI and UX

🔗 Post 3 - AI and Cybersecurity: An In-Depth Look at LLMs in SaaS Software. Using fictional HR software to understand the value and risks of using Generative AI in SaaS apps. A simple threat model to reflect on a practical use case.

There are several types of generative AI applications today. The first type of application that we all know, since we all have used ChatGPT, is the chatbot. I am zooming in on this use case in this post. I am also building a “RAG” chatbot.

A cybersecurity chatbot…

Well, I guess I don’t need to define or explain what a chatbot is. You used ChatGPT, so I think you know it. A chatbot has a free and open interface with which you can converse. You ask a question in natural language, and through this chat interface, the LLM model behind it answers your questions.

Creating this interface is simple from a frontend and UX point of view. There are multiple open-source frameworks to build your apps.

Then, you need an LLM model. The LLM models need to be “pre-trained”, meaning it has undergone training on trillions of words (or subwords that are called “tokens”), and then it have been fine-tuned with a set of instructions. Instructions are a set of questions and answers that are expected. For example, to improve answers on scientific knowledge, we can fine-tune our models on a set of science Q&A. Look at this Github repo, which contains a list of many instructions. This type of application is incredibly straightforward to implement today due to the many practical solutions available. One option is to utilize pre-trained models through APIs such as OpenAI or Anthropic. Another option is leveraging open-source models like LLAMA, developed by Facebook, and self-host it in your local data center or AWS account.

… In Minutes

For example, regarding cyber security, we can imagine a chatbot acting as a consultant. With the following prompt, we will obtain better-quality answers than without a system prompt.

👉🏼 System Prompt: You are a cybersecurity expert. Your role is to assist IT managers in implementing best practices within their company. You will ensure that you understand the context in which you operate, whether it is a large, medium, or small company, and whether it has a large or small IT team.

Once you have grasped the context, you will answer the user's questions, focusing on three aspects.

First, you will adopt a risk-based approach, highlighting the potential risks that the user faces.

Then, you will provide practical recommendations for implementing cybersecurity best practices.

Finally, you will provide the user with examples of attacks that have caused damage and had an impact when these best practices were not followed.In the picture below, I implemented it using the OpenAI Platform:

In this mini chatbot example, I give instructions to the OpenAI GPT-4 model and ask it to follow them.

Imagine having an intern to whom you want to assign a task. You know they are perfectly capable of completing it, but they need you to guide them in this work, providing them with a framework, structure, and detailed understanding of what they will do. It's the same with this system prompt.

In a system prompt, you provide instructions on

its objective,

What are the steps it must follow,

In which style should the answer be.

As the LLM model has been fine-tuned to follow these instructions, it will follow your prompt all through the chat between the agent and the user. It is why many AI experts keep repeating you can “program” new AI applications, as the system prompt is the “code” of your mini-apps.

I encourage you to open accounts on OpenAI or other provider and play with their playground. Even if you are not a developer, you will be astonished to see how easy it is to achieve results in minutes.

A bot beginners penetration tester

Let’s continue this and see if I can use it to build a bot for everyone joining the cybersecurity community each year. As I wrote earlier, I sincerely think the cybersecurity community should be more collaborative and open (see Cyber Builders: A Collaborative Approach to Cybersecurity and Your Roadmap to Becoming a Cybersecurity Pro)

Let’s consider the following prompt.

You are a cybersecurity expert.

Your role is to explain the output of security tools.

Your user is a rookie security consultant.

He/she needs help and needs to be guided.

You will:

- Explain the logs you received

- Highlight the most important information

- Guide what would be the following actions

Now imagine I want to know more about Bulbsecurity.com, the company of Georgia Weidman, author of “Penetration Testing Handbook.” Let's use the example she is taking in her book.

root@kali:~# theharvester -d bulbsecurity.com -l 500 -ball

---

-

- | |_| |__ ___ /\\\\ /\\\\__ _ _ ____ _____ __| | ___ _ __ *

- | | ' \\\\ / _ \\\\ / /_/ / _` | '__\\\\ \\\\ / / _ \\\\/ __| '__| |

- | | | | | __/ / __ \\\\ (_| | | \\\\ V / /\\\\ \\\\ || __/ | *

- |_| |_|\\\\___|_\\\\/ ||_|\\\\__,_|_| \\\\_/ \\\\_/\\\\_/\\\\__\\\\___|_| |

-

- TheHarvester Ver. 2.2a *

- Coded by Christian Martorella *

- Edge-Security Research *

- cmartorella@edge-security.com *

---

## Full harvest..

[-] Searching in Google..

Searching 0 results...

Searching 100 results...

Searching 200 results...

Searching 300 results...

--snip--

[+] Emails found:

## georgia@bulbsecurity.com

[+] Hosts found in search engines:

50.63.212.1:www.bulbsecurity.com

What does GPT-4 think about this, with the system prompt above?

The output provided is from the execution of `theharvester` tool in Kali Linux.

This tool is typically used for reconnaissance, helping to gather information

about a specific domain. In this case, the target domain is `bulbsecurity.com`.

This is what the results mean:

- **Command**: `theharvester -d bulbsecurity.com -l 500 -b all`

Here, `-d` specifies the target domain, `-l` indicates the limit for

search results, and `-b` is for selecting the data source.

- **"Full harvest"**: Means it's starting a comprehensive scan, searching in

all data sources specified.

- **"Searching in Google"**: The tool is now attempting to locate data (e.g.,

emails, hostnames) related to the target domain via Google.

This search is quite extensive, seeking 100, 200, and 300 results, respectively.

- **"Emails found"**: An email address (`georgia@bulbsecurity.com`) was

discovered that's linked to the target domain.

- **"Hosts found in search engines"**: A host, identified by the

IP address `50.63.212.1` and linked to `www.bulbsecurity.com`, has been found.

In terms of immediate action:

- It might be worth exploring the identified IP address for potential

vulnerabilities using various security tools like Nmap or Nessus.

Cross-referencing this IP with Shodan (a search engine for internet-connected

devices) could also reveal useful information.

- The discovered email could potentially be used for social engineering or

spear-phishing attacks, should that align with the scope of your investigation.

Remember, though, always respect privacy and legal boundaries.

Always obtain the necessary permissions before performing these kinds of assessments.

Pretty neat, doesn’t it?

Easy to build but quite limited

While using a system prompt to guide the LLM-based application can be a powerful approach, it is important to acknowledge its limitations. The inherent knowledge of the model is based on its pre-training database, and it is limited to what it has learned during that training process. This means that you may encounter situations where the model doesn't have the specific knowledge or information you are seeking.

In the context of cybersecurity, staying up to date with the latest threats and vulnerabilities is crucial. However, the model's knowledge is based on a cut-off date and cannot be continuously updated with real-time information. This limitation restricts its ability to provide accurate and timely insights on emerging threats.

Another challenge is the risk of hallucination. If you ask the model a question that falls outside its encoded knowledge, it may attempt to generate an answer that sounds convincing but might not be accurate or reliable. This risk of hallucination is particularly concerning in the field of cybersecurity, where precise and reliable information is essential.

Therefore, while the system prompt approach can be valuable, it is important to recognize its limitations and enhance it with other sources of information and expertise to ensure the accuracy and reliability of the insights generated.

Entering a Retrieval Augmented Generation (RAG)

RAG LLM-based applications can solve these issues. RAG is a type of application built on top of an LLM. The Prompt Engineering Guide defines it as follows:

General-purpose language models can be fine-tuned to achieve several common tasks such as sentiment analysis and named entity recognition. These tasks generally don't require additional background knowledge.

For more complex and knowledge-intensive tasks, it's possible to build a language model-based system that accesses external knowledge sources to complete tasks. This enables more factual consistency, improves the reliability of the generated responses, and helps to mitigate the problem of "hallucination."

Meta AI researchers introduced a method called Retrieval Augmented Generation (RAG) to address such knowledge-intensive tasks. RAG combines an information retrieval component with a text generator model. RAG can be fine-tuned, and its internal knowledge can be modified in an efficient manner and without needing retraining of the entire model

Let’s take the example of building a Threat Intelligence RAG bot.

You have subscribed to 100+ newsfeeds from the experts you follow on Twitter, the blog you are reading, or the companies you are paying to get an up-to-date feed. Tracking all this data is a daunting task, and most of the time, if the articles are indeed interesting, they are out of context for your particular issues. When you are asked to apply these Threat Intelligence data to your own business and IT activities, it is hard and time-consuming.

How can we combine these data feeds and the power of LLMs?

That’s what we are going to see now, using RAG. The following diagram described it

Let’s walk through how it works:

First the user send her question.

Then, the application (here “backend”) use a Vector Database to search relevant Threat Intelligence articles based on the context. It is called a “Vector” because each word is represented by a multi dimensional vector. To put it simply, it is a mathematical space where words of the same meaning are close (”computer” and “apple” are closer than “computer” and “orange”). As in Vector Database you can put meta data, we can store also the source of the information (ex: URLs).

Then, it is sending to the LLM the combo of the system prompt, the retrieved accurate chunks of text, their source URLs and the user question.

The LLM will then answer using a bit of his internal knowledge but a lot of the content that has been provided. In that case you are not looking that much as LLM as a knowledge system but more for their context understanding, summarization capacities and formatting.

Finaly, the answer is provided to the end user.

I have implemented this on a DB of 10 000+ articles I have stored over the last 2 years.

Let’s have a look at the results and compare with ChatGPT (free)

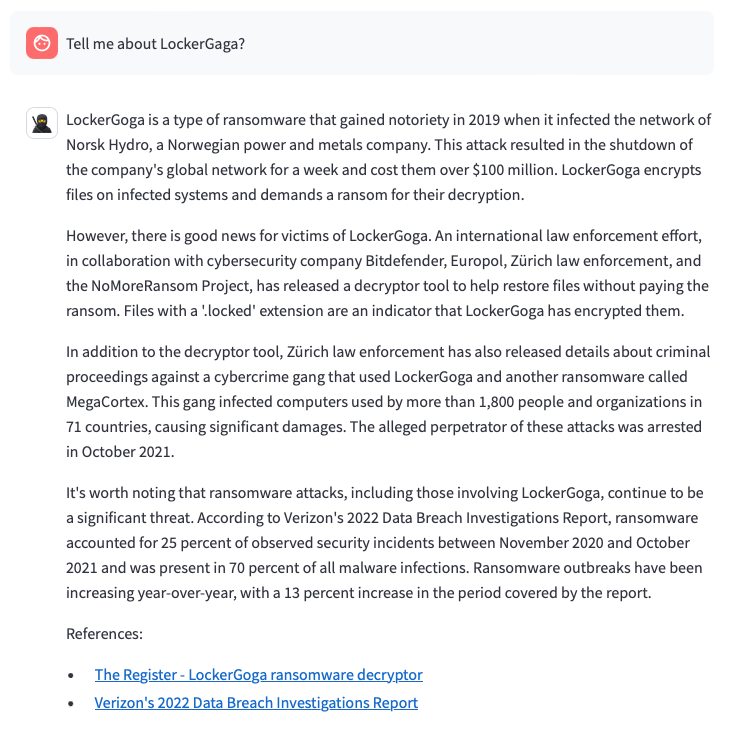

As you can see, Chat GPT (here without any system prompt), understand that it might be related to a cybersecurity issue, but do not have many clue. It recognizes its cut-off date of Sept 21 could be an issue.

Now look at what my own system is giving:

Woo. Much better. First it has understood that I am doing a typo (its LockerGoga not LockerGaga). Then, it provided me a detailed answer with the history of the attacks, its impact and what happened over the last years. Finally, it provides me references, with full URLs, I can check to know more.

If need I can continue to chat and get more informatio

Conclusion

In conclusion, the potential of LLM-based applications in the field of cybersecurity is immense. From creating a simple chatbot to a more complex Retrieval Augmented Generation (RAG) system, these applications can provide valuable insights and solutions to cybersecurity challenges. However, it's important to remember that while these applications can be powerful tools, they are not without their limitations. The inherent knowledge of the model is based on its pre-training database, and it is limited to what it has learned during that training process. Therefore, it's crucial to enhance these applications with other sources of information and expertise to ensure the accuracy and reliability of the insights generated.

As we continue to explore the intersection of AI and Cyber, we will undoubtedly uncover more innovative ways to leverage these technologies. The future of cybersecurity is exciting, and we look forward to sharing more insights and developments in this space. Stay tuned for more posts in this series, and don't hesitate to reach out if you have any questions or ideas.

Let's continue to build and collaborate for a more secure digital world together.

Laurent 💚

PS: do me a favor, if you like this post, hit the “heart” icon in the bottom 😉